Machine learning models vary in size from a few parameters to hundreds of billions of parameters (e.g. GPT-3, with 175 billion). The training data ranges from a few hundred rows to millions of rows. Training a model on a single CPU is not always efficient, so people started using GPUs.

GPU vs CPU vs GPGPU

Wait a minute... why GPUs?

A GPU, or graphics processing unit, is better at processing data in parallel than a CPU. This Quora answer explains the difference in detail.

But aren't GPUs used for graphics?

Yes, they are, but graphics workloads require a lot of matrix and vector operations, and GPUs shine at them. The industry soon came up with GPGPU, or general-purpose computing on graphics processing units: using GPUs for non-graphical (general-purpose) work such as matrix and vector maths. GPGPU is not a hardware concept but a software concept. Subsequently, NVIDIA came up with CUDA, or Compute Unified Device Architecture, a platform and programming model used to implement GPGPU on its GPUs. The most popular open standard for GPGPU is OpenCL.
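To see why this kind of maths suits parallel hardware, here is a minimal sketch in plain Python. It is not GPU code. It only illustrates that every output row of a matrix product can be computed independently, which is the property a GPU exploits with thousands of cores. The function names `row_times_matrix` and `parallel_matmul` are made up for this illustration, and Python threads will not give a real speed-up here.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, b):
    """Compute one output row; it depends only on `row` and `b`."""
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(b))) for j in range(cols)]


def parallel_matmul(a, b, workers=4):
    """Multiply a (m x n) by b (n x p), one independent task per output row."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the input rows.
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8], [9, 10]]
result = parallel_matmul(a, b)
print(result)
```

Because no task reads another task's output, the work can be split across as many workers as there are rows (or, on a GPU, as many threads as there are output elements).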

What else requires a lot of matrix and vector operations?

You guessed it right! Machine learning training! If you need more information on which GPU to use for local machine learning training, refer to this article.

What are Volta and Tensor Cores?

We heard about CUDA earlier, which is used to implement GPGPU. Nvidia then came up with a GPU architecture called Volta, which introduced Tensor Cores: units specialised for matrix multiply-accumulate operations, which they perform much faster than general-purpose CUDA cores. For more details on Tensor Cores and how they improve over CUDA cores, check this Nvidia article. This section would be incomplete without an introduction to the Nvidia deep learning libraries.
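The basic operation of a Volta Tensor Core is a fused multiply-add on small matrices, D = A x B + C, with half-precision (FP16) inputs and optional single-precision (FP32) accumulation. The sketch below only emulates that arithmetic in plain Python, assuming 4x4 tiles. The helper names (`to_fp16`, `to_fp32`, `mma_4x4`) are hypothetical, and real hardware differs in accumulation order and rounding details.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mma_4x4(a, b, c):
    """Return D = A x B + C for 4x4 matrices.

    A and B are rounded to FP16 first; the running sum is kept in FP32.
    """
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = []
    for i in range(4):
        out_row = []
        for j in range(4):
            acc = to_fp32(c[i][j])
            for k in range(4):
                acc = to_fp32(acc + a16[i][k] * b16[k][j])
            out_row.append(acc)
        d.append(out_row)
    return d


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
ones = [[1.0] * 4 for _ in range(4)]
d = mma_4x4(identity, b, ones)  # every entry of b, plus one
print(d[0])
```

The point of the mixed precision is that the FP16 inputs halve memory traffic, while the wider accumulator limits the rounding error that builds up over many additions.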

Nvidia Deep Learning libraries – cuDNN, NCCL and cuBLAS

cuBLAS provides a GPU-accelerated implementation of the Basic Linear Algebra Subprograms (BLAS). cuBLASMg is an extension that provides multi-GPU matrix-matrix multiplication.
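The workhorse BLAS routine for deep learning is GEMM (general matrix-matrix multiplication), which computes C = alpha * A * B + beta * C. As a rough reference for what such a routine returns, here is a plain-Python sketch. The function `gemm` below is illustrative only and is not the cuBLAS API, which also deals with transposition flags, leading dimensions and column-major storage.

```python
def gemm(alpha, a, b, beta, c):
    """Return alpha * (A x B) + beta * C for row-major nested lists.

    A is m x n, B is n x p, C is m x p.
    """
    m, n, p = len(a), len(b), len(b[0])
    return [
        [
            alpha * sum(a[i][k] * b[k][j] for k in range(n)) + beta * c[i][j]
            for j in range(p)
        ]
        for i in range(m)
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]
out = gemm(2, a, b, 3, c)
print(out)
```

Setting `alpha=1` and `beta=0` gives a plain matrix product, while `beta=1` accumulates into an existing result.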

cuDNN is a GPU-accelerated library of deep neural network primitives. It provides optimized and tuned implementations of forward and backward convolution, pooling, normalization and activation layers. cuDNN is supported on the Volta architecture.
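To make "primitives" concrete, the following plain-Python sketch shows the forward pass of three of them: convolution, a ReLU activation and 2x2 max pooling. It is a reference for the arithmetic only, under simplifying assumptions (single channel, no padding, stride 1 for the convolution), and none of these function names come from cuDNN.

```python
def conv2d(image, kernel):
    """Forward convolution (cross-correlation), no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation: replace negative values with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Pooling: keep the largest value in each non-overlapping 2x2 window."""
    return [
        [
            max(
                feature_map[i][j],
                feature_map[i][j + 1],
                feature_map[i + 1][j],
                feature_map[i + 1][j + 1],
            )
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 0, 2, 1],
    [0, 1, 2, 1, 0],
]
kernel = [[1, -1], [-1, 1]]

features = conv2d(image, kernel)           # 4 x 4
pooled = max_pool_2x2(relu(features))      # 2 x 2
print(pooled)
```

A library like cuDNN provides tuned GPU versions of these building blocks (and their backward passes) so that frameworks do not have to write them by hand.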

NCCL, or Nvidia Collective Communications Library, provides communication primitives for inter-GPU and inter-node communication, optimized for Nvidia GPUs. It provides routines such as all-gather, all-reduce, broadcast, reduce and reduce-scatter.
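The semantics of these collectives are easy to simulate. In the sketch below each "GPU" is just a Python list inside an outer list indexed by rank, and the reduction is a sum. No real communication takes place and the function names are illustrative rather than the NCCL API. As a simplification, `reduce` leaves the non-root buffers unchanged.

```python
def broadcast(buffers, root=0):
    """Every rank ends up with a copy of the root's buffer."""
    return [list(buffers[root]) for _ in buffers]


def reduce(buffers, root=0):
    """Only the root receives the element-wise sum."""
    total = [sum(vals) for vals in zip(*buffers)]
    return [total if rank == root else list(buf) for rank, buf in enumerate(buffers)]


def all_reduce(buffers):
    """Every rank receives the element-wise sum."""
    total = [sum(vals) for vals in zip(*buffers)]
    return [list(total) for _ in buffers]


def all_gather(buffers):
    """Every rank receives the concatenation of all buffers, in rank order."""
    gathered = [v for buf in buffers for v in buf]
    return [list(gathered) for _ in buffers]


def reduce_scatter(buffers):
    """Sum element-wise, then give each rank one equal slice of the result."""
    n = len(buffers)
    total = [sum(vals) for vals in zip(*buffers)]
    chunk = len(total) // n
    return [total[r * chunk:(r + 1) * chunk] for r in range(n)]


# Two ranks, each holding gradients for the same four parameters.
grads = [[1, 2, 3, 4], [10, 20, 30, 40]]
print(all_reduce(grads))
print(reduce_scatter(grads))
```

In this simulation, `all_gather(reduce_scatter(grads))` gives the same result as `all_reduce(grads)`, which shows how an all-reduce can be composed from the other two collectives.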

Machine Learning hardware on AWS

| Lifecycle | Hardware | Description |

| Training | EC2 instances powered by AWS Trainium (coming soon) | AWS Trainium is the second chip developed by AWS and is used for training Machine Learning models. It provides the highest performance with most number of Teraflops (TFLOP) of compute power in the cloud. |

| Training | EC2 instances powered by Habana Gaudi (coming soon) | EC2 instances that use the Gaudi Accelerators from Intel Habana labs |

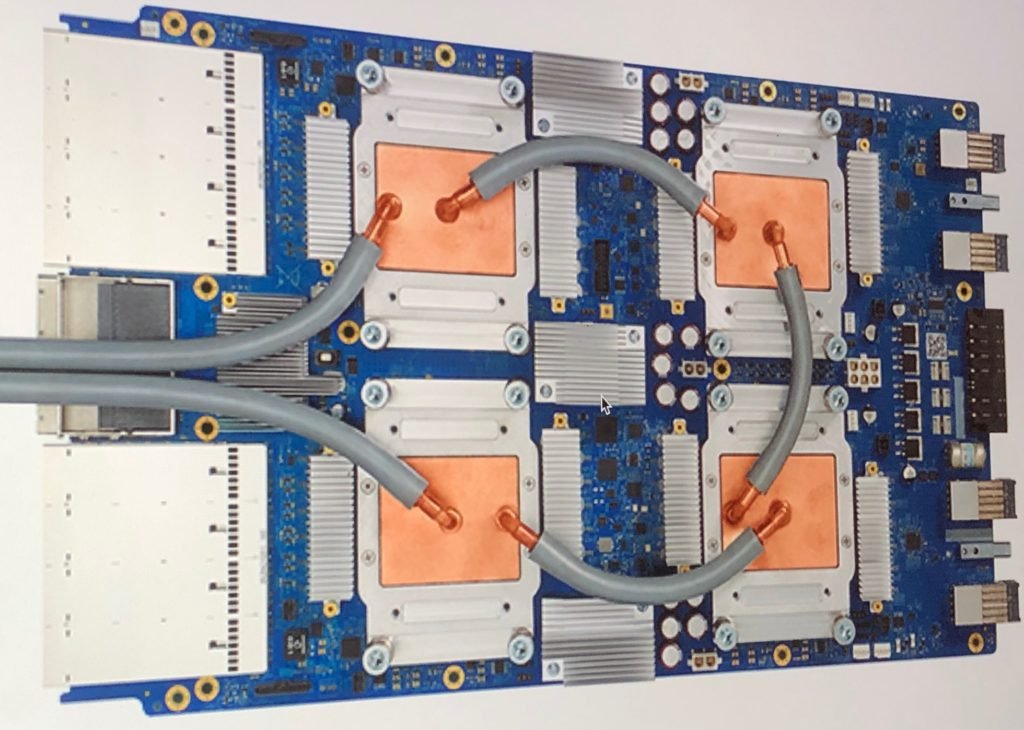

| Training | EC2 P4 instances | 8 NVIDIA A100 Tensor Core GPUs, 400 Gbps instance networking, and support for Elastic Fabric Adapter (EFA) with NVIDIA GPUDirect RDMA (remote direct memory access). |

| Training | EC2 G4 instances | G4dn instances feature NVIDIA T4 GPUs and custom Intel Cascade Lake CPUs. G4ad instances feature the latest AMD Radeon Pro V520 GPUs and 2nd generation AMD EPYC processors. |

| Inference | Amazon EC2 Inf1 instances | Contains upto 16 AWS Inferentia chips. AWS Inferentia is AWS’s first custom designed silicon chip. Each chip supports 128 TOPS (trillions of operations per second) |
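
As a quick back-of-the-envelope check on the Inf1 figures in the table, the per-chip number can be multiplied out to a per-instance peak. This is simple arithmetic on the table's values, not a measured or vendor-quoted result, and the variable names are only for illustration.

```python
chips_per_instance = 16   # largest Inf1 size, from the table
tops_per_chip = 128       # trillions of operations per second, from the table

# Theoretical aggregate peak if every chip ran at its maximum rate.
peak_tops = chips_per_instance * tops_per_chip
print(f"Theoretical peak: {peak_tops} TOPS")
```

Real inference throughput depends on the model, batch size and how well the work is spread across chips, so this number is an upper bound rather than an expectation.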

Machine Learning hardware on Google

Before we look at the hardware...

Application-Specific Integrated Circuit (ASIC) and Field-Programmable Gate Array (FPGA)

An ASIC is an integrated circuit built for a specific purpose, in contrast to a chip built for general use. An FPGA is an integrated circuit that customers and designers can configure after manufacturing. FPGAs are generally used for prototyping and for devices with low production volumes, whereas ASICs are used for very large production volumes.

AI accelerator

An AI accelerator is a class of specialized hardware accelerator designed for artificial intelligence applications, especially artificial neural networks and machine learning.

Google Cloud TPU (Tensor Processing Unit)

A TPU is an AI accelerator ASIC built by Google for machine learning training and inference and optimized for the TensorFlow library. TPUs are well suited for CNNs, while GPUs have benefits for some fully-connected neural networks, and CPUs can have advantages for RNNs.

Google also provides GPU options such as the NVIDIA K80, P100, P4, T4, V100, and A100.

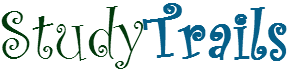

NVIDIA Tesla and Ampere

Nvidia Tesla is a class of products targeted at GPGPU. Because of confusion over the name, Nvidia retired the Tesla brand and now markets these products as Nvidia data center GPUs; the Ampere A100 GPU is one of them. The specification for the chips can be found here. The recommended Nvidia GPU products on AWS are specified here.

AMD Radeon Instinct

AMD Radeon Instinct is AMD's line of deep learning GPUs and competes with Nvidia Tesla and Intel Xeon Phi. Since the introduction of the MI100 in November 2020, the Radeon Instinct family has been known as AMD Instinct, dropping the Radeon brand from its name.

In the next article in the series we will look at architectures for distributed computing and the concepts we learnt in this post will be useful there.