The Active Brain: How Google’s “ATLAS” Rewires Itself to Master Infinite Context

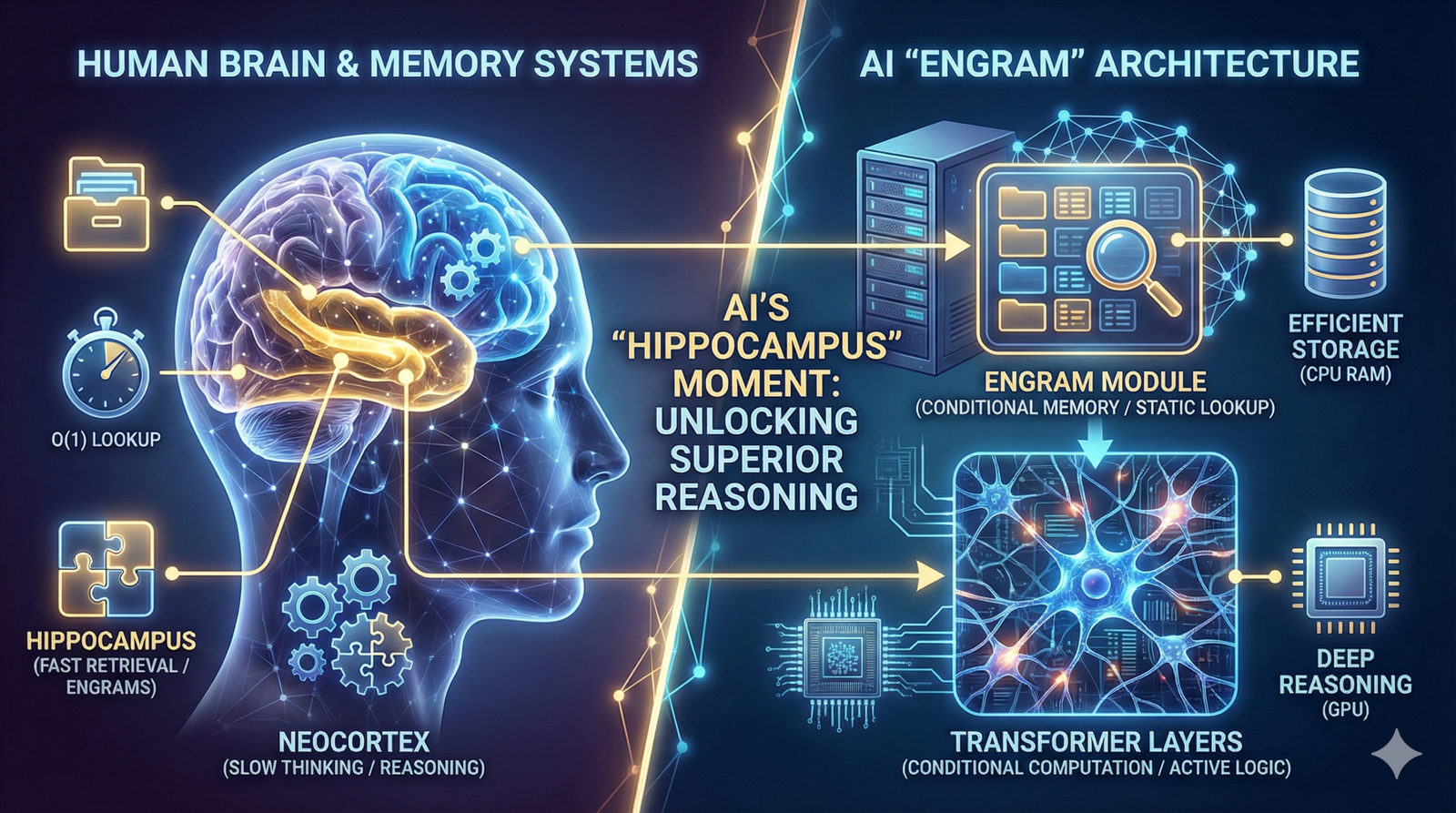

In my last article, we explored how DeepSeek’s Engram effectively gave AI a hippocampus, offloading static facts into a massive, efficient lookup table. It was a breakthrough in separating memory from reasoning. But what if the model didn’t just “look up” memories? What if it actually rewired its own brain while it was reading, optimizing its …